
GitHub has updated the AI model behind Copilot, a programming assistant that generates source code and function recommendations in real time in Visual Studio, and says it is now safer and more powerful.

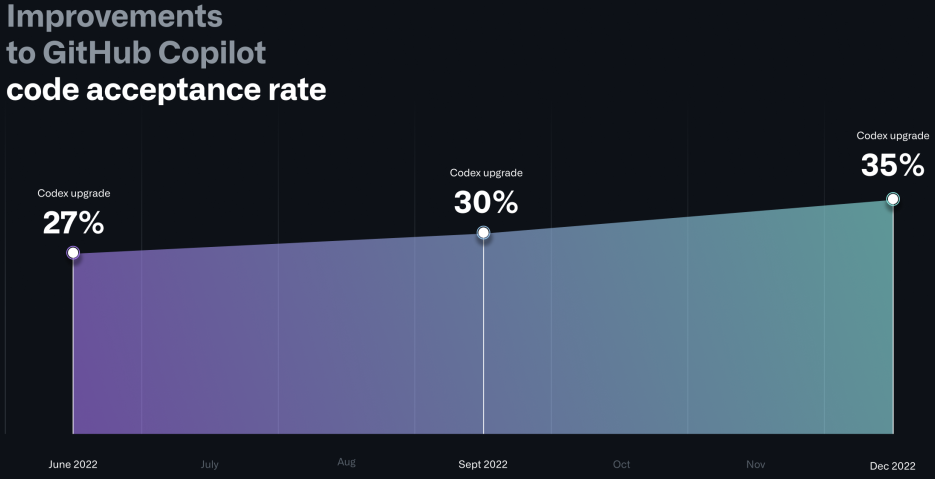

The company says the new AI model, which will roll out to users this week, delivers higher-quality suggestions in less time, further improving the efficiency of the software developers who use it by raising the suggestion acceptance rate.

Copilot will introduce a new paradigm called “Fill-In-the-Middle”, which uses a library of known code suffixes and leaves a gap for the AI tool to fill, so that suggestions are more relevant to, and more consistent with, the rest of the project code.
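The idea of filling a gap between known code before and after the cursor can be illustrated with a minimal sketch. The sentinel strings and the function names `build_fim_prompt` and `splice_completion` below are hypothetical and chosen only for illustration; the article does not describe the prompt format Copilot actually uses.

```python
# Hypothetical sentinel strings; real models define their own special tokens.
PREFIX_TOKEN = "<fim_prefix>"
SUFFIX_TOKEN = "<fim_suffix>"
MIDDLE_TOKEN = "<fim_middle>"


def build_fim_prompt(source: str, cursor: int) -> str:
    """Split the source at the cursor and arrange it so a model generates the middle."""
    if not 0 <= cursor <= len(source):
        raise ValueError("cursor is outside the source text")
    prefix, suffix = source[:cursor], source[cursor:]
    # The model sees the code before AND after the gap, then continues after MIDDLE_TOKEN.
    return f"{PREFIX_TOKEN}{prefix}{SUFFIX_TOKEN}{suffix}{MIDDLE_TOKEN}"


def splice_completion(source: str, cursor: int, completion: str) -> str:
    """Insert the generated middle back into the original source at the cursor."""
    return source[:cursor] + completion + source[cursor:]


if __name__ == "__main__":
    source = "def add(a, b):\n    \n    return result\n"
    cursor = source.index("    \n") + 4  # position just after the indentation of the empty line
    print(build_fim_prompt(source, cursor))
    print(splice_completion(source, cursor, "result = a + b"))
```

Because the suffix (`return result`) is part of the prompt, a model has the context it needs to propose a middle that defines `result`, rather than one that only continues the prefix.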

Additionally, GitHub updated Copilot’s client to reduce unwanted suggestions by 4.5%, which should improve overall code acceptance rates.

“When we launched GitHub Copilot for individuals in June 2022, more than 27% of developers’ code files on average were generated by GitHub Copilot,” said Shuyin Zhao, Senior Director of Product Management.

“Today, GitHub Copilot is behind an average of 46% of a developer’s code across all programming languages, and in Java, that number jumps to 61%.”

More secure suggestions

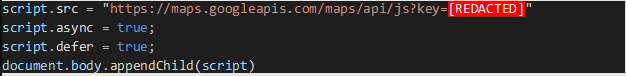

One of the major improvements in this Copilot update is a new security vulnerability filtering system that helps identify and block insecure suggestions such as hard-coded credentials, path injections and SQL injections.

“The new system leverages LLMs (large language models) to approximate the behavior of static analysis tools. And since GitHub Copilot runs advanced AI models on powerful compute resources, it is incredibly fast and can even detect vulnerable patterns in incomplete code fragments,” Zhao said.

“This means insecure coding patterns are quickly blocked and replaced with alternative suggestions.”
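To make the block-and-replace step concrete, here is a minimal sketch of a suggestion filter. The system described in the article is LLM-based; this sketch swaps in two simple regular expressions as a stand-in, purely for illustration. The pattern set and the name `filter_suggestions` are assumptions, not GitHub's rules or API.

```python
import re

# Illustrative patterns only: a regex stand-in for the LLM-based filter, not GitHub's rules.
INSECURE_PATTERNS = {
    "hard-coded credential": re.compile(
        r"""(?i)\b(password|passwd|secret|api_key|token)\s*=\s*["'][^"']+["']"""
    ),
    "SQL built by string concatenation": re.compile(
        r"""(?i)execute\(\s*["'][^"']*["']\s*\+"""
    ),
}


def filter_suggestions(candidates):
    """Return (accepted, blocked); blocked holds (suggestion, reason) pairs."""
    accepted, blocked = [], []
    for code in candidates:
        # Take the first pattern that matches, if any, as the reason for blocking.
        reason = next(
            (name for name, pattern in INSECURE_PATTERNS.items() if pattern.search(code)),
            None,
        )
        if reason is None:
            accepted.append(code)
        else:
            blocked.append((code, reason))
    return accepted, blocked


if __name__ == "__main__":
    candidates = [
        'password = "hunter2"',
        'password = os.environ["DB_PASSWORD"]',
        'cursor.execute("SELECT * FROM users WHERE id = " + user_id)',
        'cursor.execute("SELECT * FROM users WHERE id = ?", (user_id,))',
    ]
    accepted, blocked = filter_suggestions(candidates)
    for code, reason in blocked:
        print(f"blocked ({reason}): {code}")
    for code in accepted:
        print(f"accepted: {code}")
```

In this sketch the environment-variable lookup and the parameterized query pass through, so a safer alternative remains available when the insecure candidate is dropped.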

The software company says Copilot may generate secrets such as keys, credentials and passwords that resemble those seen in its training data. However, these are not usable because they are entirely fictional, and they will be blocked by the new filtering system.

The appearance of these secrets in Copilot code suggestions has drawn harsh criticism from the software developer community, with many accusing Microsoft of training its AI models on large, publicly available datasets without regard for security, including datasets that mistakenly contain secrets.

By blocking dangerous suggestions in the editor in real time, GitHub could also provide some resistance against poisoned dataset attacks, which aim to covertly train AI assistants to make suggestions containing malicious payloads.

At this time, Copilot’s LLMs are still being trained to distinguish between vulnerable and non-vulnerable code patterns, so the AI model’s performance on this front should gradually improve in the near future.
