The Federal Bureau of Investigation (FBI) warns of a growing trend of malicious actors creating deepfake content to carry out sextortion attacks.

Sextortion is a form of online blackmail in which malicious actors threaten to publicly disclose explicit images and videos of their targets that they have stolen (through hacking) or acquired (through coercion), usually demanding payment in exchange for withholding the material.

In many cases of sextortion, the compromising content is not real, with threat actors only pretending to have access to it in order to scare victims into paying an extortion demand.

The FBI warns that sextortionists are now harvesting publicly available images of their targets, such as innocuous images and videos posted on social media platforms. These images are then fed into deepfake content creation tools that turn them into AI-generated sexually explicit content.

Although the images or videos produced are not authentic, they look very real, so they still serve the threat actor’s blackmail purpose: sending this material to the target’s family, colleagues, and other contacts could cause serious personal and reputational harm to the victim.

“In April 2023, the FBI observed an increase in the number of sextortion victims reporting the use of fake images or videos created from content posted on their social media sites or web posts, provided to the malicious actor on request, or captured during video chats,” reads the alert published on the FBI’s IC3 portal.

“Based on recent victim reports, malicious actors have typically demanded: 1. Payment (e.g., money, gift cards) with threats to share the images or videos with members of the family or friends from social media if funds were not received; or 2. The victim sends real sexually themed images or videos.”

The FBI says creators of explicit content sometimes skip the extortion part and post the created videos directly to porn websites, exposing victims to large audiences without their knowledge or consent.

In some cases, sextortionists use these now-public uploads to increase pressure on the victim, demanding payment to remove the posted images or videos from the sites.

The FBI reports that this media manipulation activity has, unfortunately, also affected minors.

How to protect yourself

The speed at which AI-enabled content creation tools are becoming available to a wider audience is creating a hostile environment for all internet users, especially those in sensitive categories.

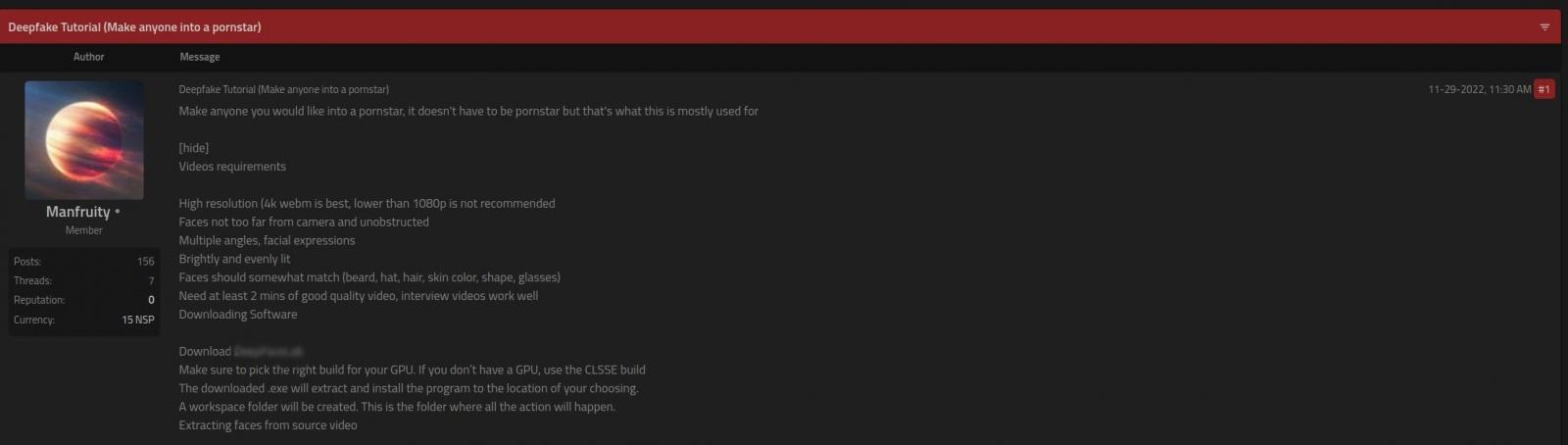

Several content creation tool projects are available for free on GitHub that can create realistic videos from a single image of the target’s face, without requiring additional training or datasets.

Many of these tools have built-in safeguards to prevent abuse, but those sold on underground forums and dark web marketplaces do not.

Source: Kaspersky

The FBI recommends that parents monitor their children’s online activity and talk to them about the risks associated with sharing personal media online.

In addition, parents are advised to run online searches to determine how exposed their children are online and to take the necessary steps to have the content removed.

Adults who post images or videos online should restrict access to a small, private circle of friends to reduce exposure. At the same time, children’s faces should always be blurred or masked.

Finally, if you discover deepfake content depicting you on porn sites, report it to the authorities and contact the hosting platform to request the removal of the offending media.

The UK has recently introduced a law in the form of an amendment to the Online Safety Bill that classifies the non-consensual sharing of deepfakes as a crime.
