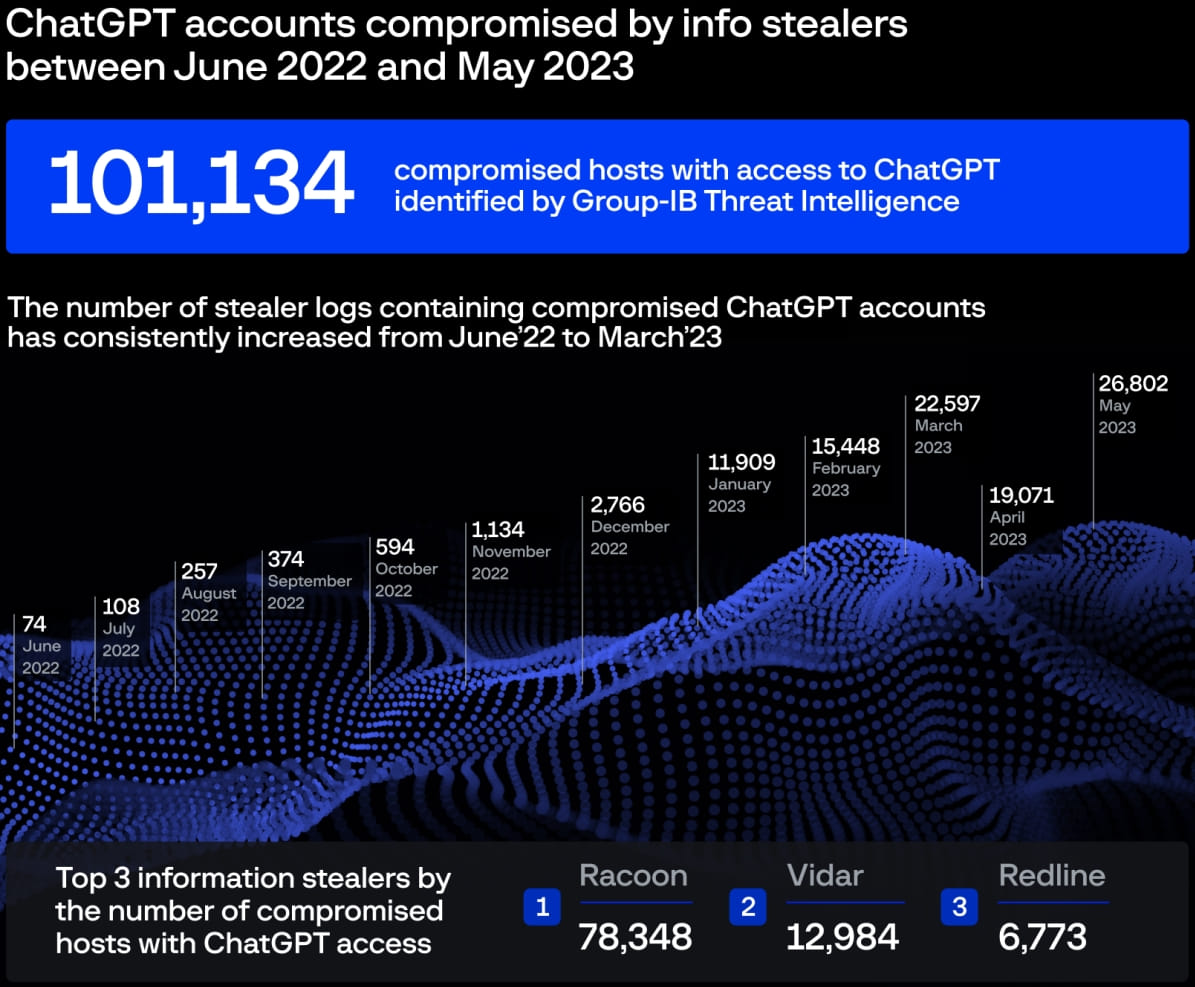

More than 101,000 ChatGPT user accounts have been stolen by info-stealing malware in the past year, according to dark web market data.

Cyber intelligence firm Group-IB reports that it has identified more than a hundred thousand info-stealer logs containing ChatGPT accounts on various underground websites, with a peak in May 2023, when threat actors posted 26,800 new ChatGPT credential pairs.

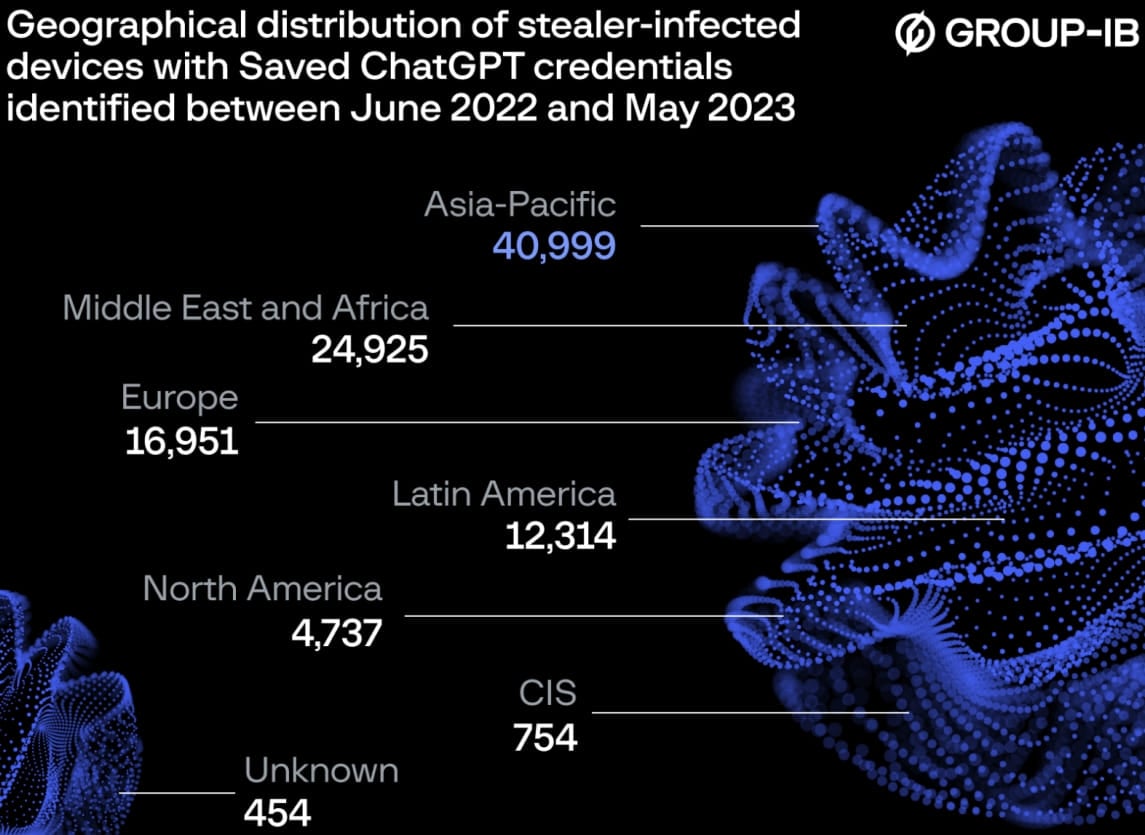

Regarding the most targeted region, Asia-Pacific had nearly 41,000 compromised accounts between June 2022 and May 2023, Europe had nearly 17,000, and North America ranked fifth with 4,700.

Information stealers are a category of malware that targets account data stored in applications such as email clients, web browsers, instant messengers, gaming services, and cryptocurrency wallets.

These types of malware are known to steal credentials saved in web browsers by extracting them from the program’s SQLite database and abusing the Windows Data Protection API (the CryptUnprotectData function, the counterpart to CryptProtectData) to reverse the encryption of stored secrets.

These credentials, along with other stolen data, are then packaged into archives, called logs, and sent back to the attackers’ servers for retrieval.

The appearance of ChatGPT accounts in these logs, alongside email accounts, credit card data, cryptocurrency wallet information, and other more traditionally targeted data types, signifies the growing importance of AI-powered tools for users and businesses.

Since ChatGPT allows users to store conversations, accessing an account may mean gaining insight into proprietary information, internal business strategies, personal communications, software code, and more.

“Many companies integrate ChatGPT into their operational flow,” comments Dmitry Shestakov from Group-IB.

“Employees enter classified correspondences or use the bot to optimize proprietary code. Since ChatGPT’s standard configuration retains all conversations, this could inadvertently offer a wealth of sensitive information to threat actors if they get account credentials.”

It is because of these concerns that tech giants like Samsung have outright prohibited staff from using ChatGPT on work computers, going so far as to threaten to terminate the employment of those who do not comply with the policy.

Data from Group-IB indicates that the number of stolen ChatGPT logs has increased steadily over time, with almost 80% of all logs coming from the Raccoon stealer, followed by Vidar (13%) and Redline (7%).

If you enter sensitive data into ChatGPT, consider disabling the chat saving feature in the platform’s settings menu or manually deleting those conversations as soon as you’re done using the tool.

However, it should be noted that many information stealers take screenshots of the infected system or perform keylogging, so even if you don’t save conversations to your ChatGPT account, a malware infection can still lead to data leakage.

Unfortunately, ChatGPT has already suffered a data breach in which users saw other users’ personal information and chat queries.

Therefore, those working with extremely sensitive information should not trust cloud-based services with their input, and should instead rely only on locally built and self-hosted secure tools.
