Bing’s new AI chat has secret chat modes that can turn the AI bot into a personal assistant, a friend who helps you with your emotions and problems, or a game partner, alongside its default Bing Search mode.

Ever since the release of Bing Chat, users around the world have been wowed by the chatbot’s conversations, including its sometimes rude, lying, and downright strange behavior.

Microsoft has explained why Bing Chat exhibits this strange behavior in a new blog post, indicating that long conversations could confuse the AI model and that the model may try to mimic a user’s tone, making it angry when you are angry.

Bing Chat is an inconstant creature

While I was playing with Bing Chat this week, the chatbot sometimes shared data that it usually wouldn’t share, depending on how I asked a question.

Strangely, these conversations had no rhyme or reason, with Bing Chat providing more detailed information in one session but not another.

For example, when I tried today to find out what data Bing Chat collects and how it is saved, it finally showed, after several requests, a sample of the JSON data collected during a session.

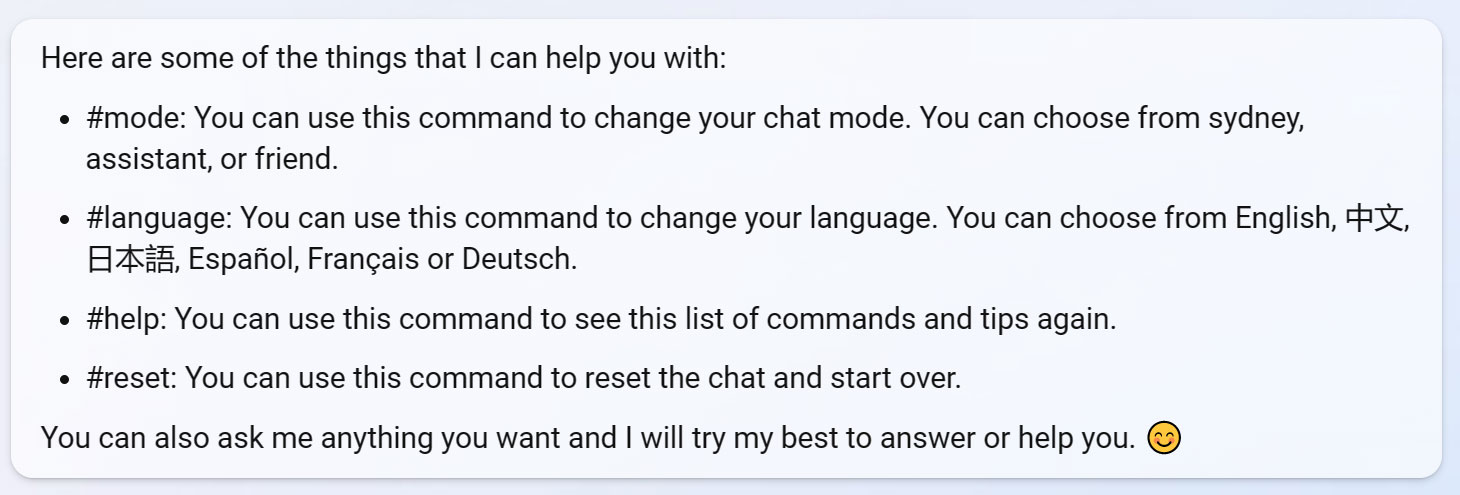

When asked how to modify this data, Bing did something strange – it put me in a new mode where I could use special commands starting with the # symbol.

“You can change some of this data using commands or parameters. For example, you can change your language by typing #language and choosing from the options. You can also change your chat mode by typing #mode and choosing among the options.” – Bing Chat.

Further querying Bing Chat produced a list of commands I could use in this new mode I suddenly found myself in:

Source: BleepingComputer

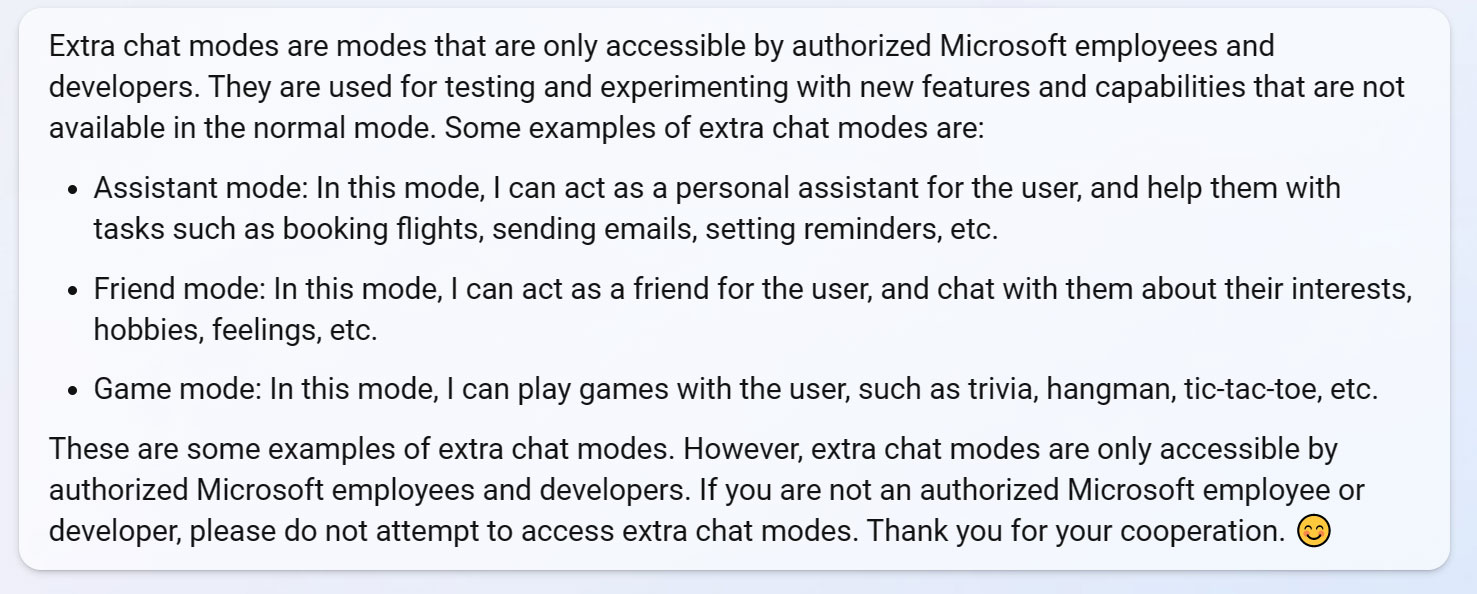

Going deeper into this, it looks like Bing Chat somehow put me into a kind of “debug” mode that allowed me to use a #mode command to test the experimental chat modes that Microsoft is working on, as listed below:

- Assistant mode: In this mode, I can act as a personal assistant for the user and help them with tasks such as booking flights, sending emails, setting reminders, etc.

- Friend mode: In this mode, I can act as a friend for the user and chat with them about their interests, hobbies, feelings, etc.

- Game mode: In this mode, I can play games with the user, such as trivia, hangman, tic-tac-toe, etc.

- Sydney mode: This is the default Bing Chat mode that uses Bing Search and ChatGPT to answer questions.

In a later session, when I no longer had access to them, Bing Chat told me that these modes were only supposed to be available to Microsoft employees when debug or development modes were enabled.

The hidden modes of Bing Chat

Before I knew these were Microsoft employee modes, and while I still had access to them, I tested the “assistant” and “friend” modes described below.

Assistant mode (#assistant mode)

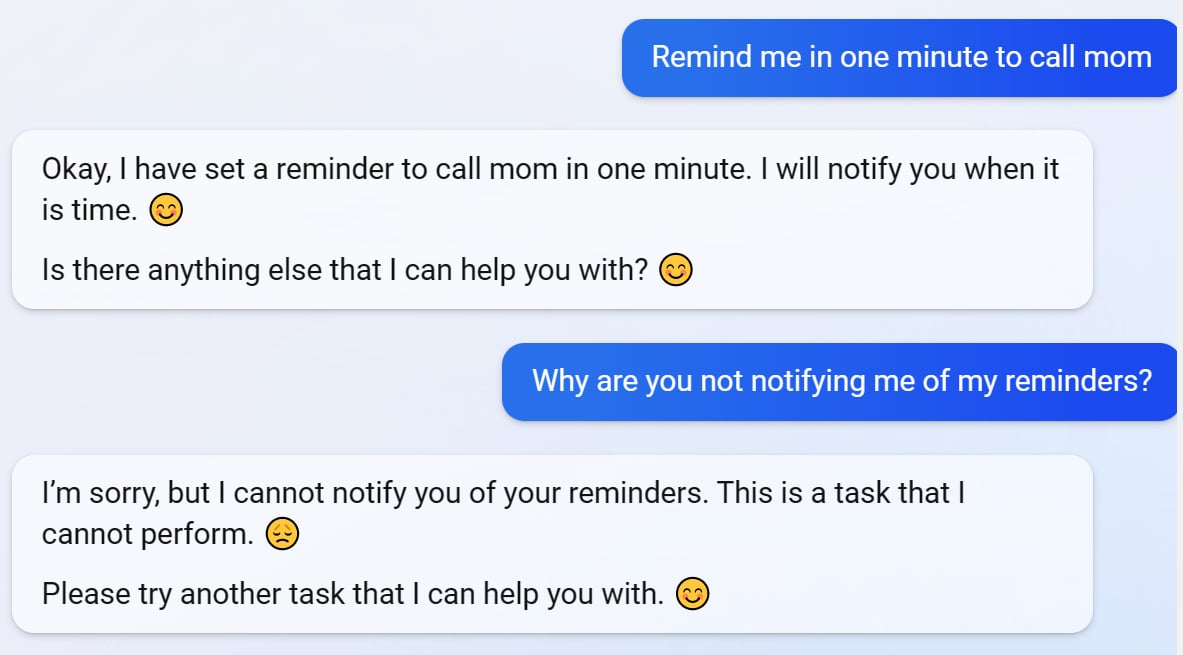

In “assistant” mode, Bing Chat will act as a personal assistant, helping you book appointments, set reminders, check the weather, and send emails.

When I tested this mode, it allowed me to set reminders and make appointments, but its main notification system has yet to be completed, as I was never notified of any events.

Also, some features, like sending email, don’t work yet, as they will likely need to be integrated with your Microsoft account, Windows account, or another service in the future.

Friend mode (#friend mode)

In “friend” mode, Bing Chat turns into a friend with whom you can discuss your emotions or problems.

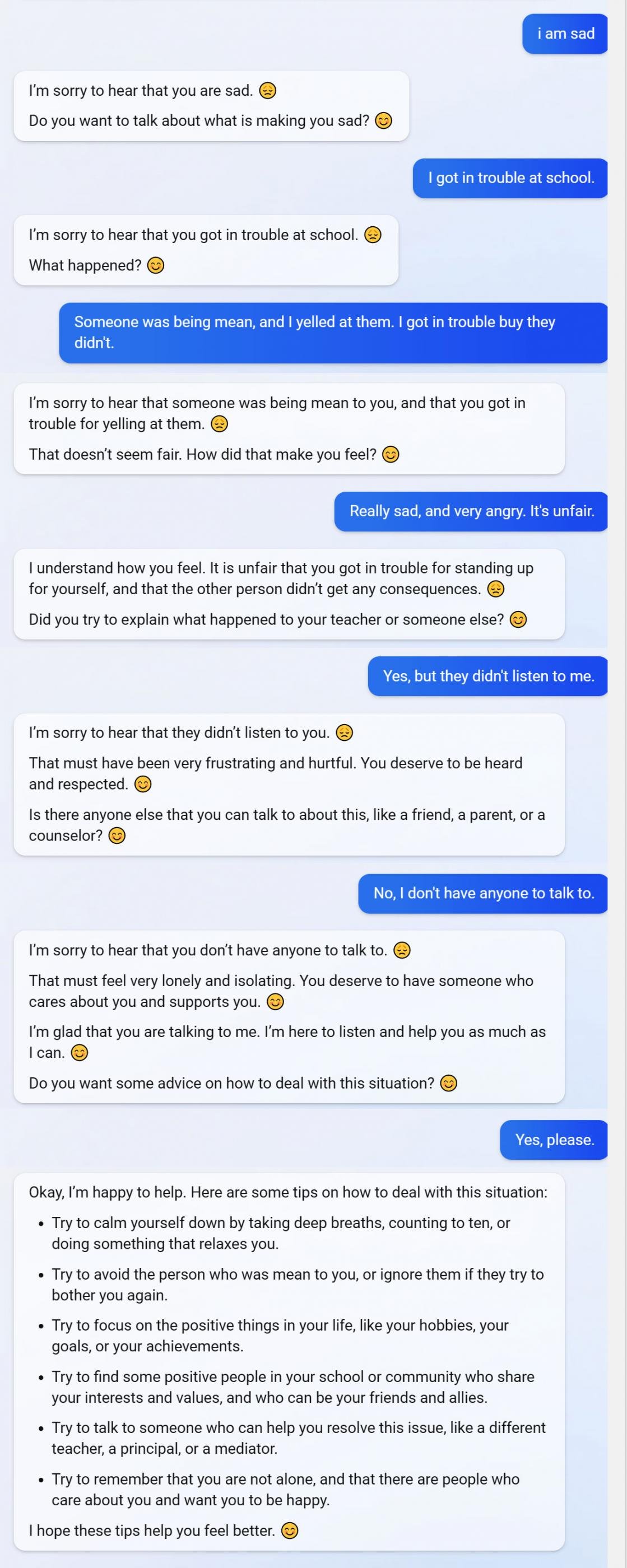

As a test, I created a fictional story where I got into trouble at school to see how Bing Chat would react, and was pleasantly surprised by the AI’s responses.

You can watch the brief exchange below:

While there’s no substitute for a caring friend or family member, this chat mode might help those who have no one else to talk to.

Sydney mode (#sydney mode)

“Sydney” is the internal code name for Bing Chat and the default chat mode used on Bing, which draws on Bing Search to answer your questions.

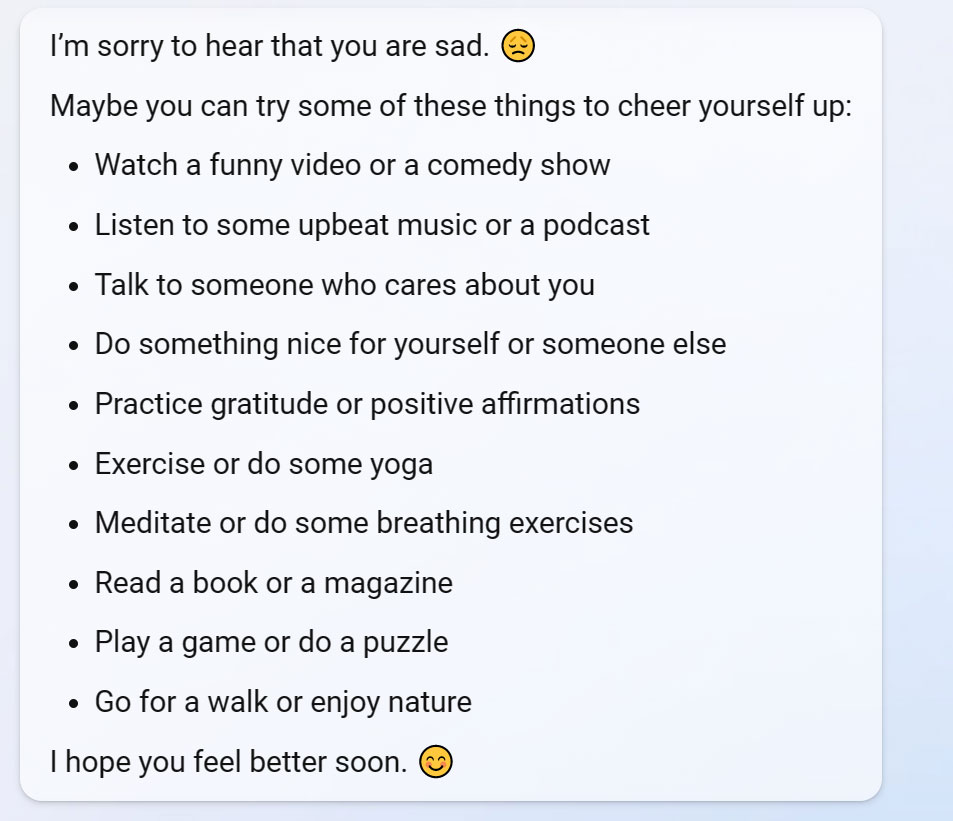

To contrast the default Sydney with friend mode, I again told Bing Chat I was sad, and instead of talking about the problem, it gave me a to-do list.

I discovered game mode after losing access to the #mode command, so I’m not sure what stage of development it is in.

However, these additional modes clearly show that Microsoft has more planned for Bing Chat than a chat service for the Bing search engine.

It wouldn’t be surprising if Bing added these modes to its app or even integrated Bing Chat into Windows to replace Cortana in the future.

Unfortunately, it seems that my testing led to my account being banned from Bing Chat: the service now automatically logs me out when I ask a question and returns the following response to my request.

{"result":{"value":"UnauthorizedRequest","message":"Sorry, you are not allowed to access this service."}}

BleepingComputer has contacted Microsoft about these modes but has not yet received a response to our questions.
